From 8d91228f03f35c04305bf33d8817f21ef379776a Mon Sep 17 00:00:00 2001

From: Svavar Konradsson <svavarkonn@gmail.com>

Date: Tue, 27 Jun 2023 13:33:31 +0000

Subject: [PATCH] minor markdown fix in integration.md

---

docs/assignments/week08.md | 4 +++-

docs/final-project/integration.md | 2 +-

public/assignments/week08.html | 17 ++++++++++++++++-

public/final-project/integration.html | 2 +-

public/search/search_index.json | 2 +-

public/sitemap.xml.gz | Bin 217 -> 217 bytes

6 files changed, 22 insertions(+), 5 deletions(-)

diff --git a/docs/assignments/week08.md b/docs/assignments/week08.md

index 7dc1e1be..90d97b50 100644

--- a/docs/assignments/week08.md

+++ b/docs/assignments/week08.md

@@ -147,11 +147,13 @@ Then I tried the super thin 0.01 inch flat end mill, and I must admit that I for

![Milling test 0.01 inch](images/week08/test-010.jpg){: style="width:100%"}*I broke two 0.01 inch milling bits trying to mill this test file.*

+### Eventual success with fine traces

+

I waited until the [final project](https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/assignments/week18.html#what-has-worked-what-hasnt) to try the 0.01 inch end mill again, then at the very slow speed of 0.1 mm/s. It worked for an hour and then broke in the middle of the night. I documented my frustration in my [final project video](https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/final-project/presentation.html) and in my final project presentation, Neil Gershenfeld mentioned that everything has to be perfect for this milling bit to work. You have to plane the wasteboard, clean the machine, everything has to be just right. And I think I also made the mistake of having it mill all the traces, instead of just around the ICs with the smallest pads.

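![V-bit](https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/assignments/images/week18/beautiful_traces.jpg){: style="width:100%"}*In the end I was able to mill the finest traces on my final project board with a V-bit. Then I cleared the whole board with a 1/64th inch flat end mill and milled the holes and outline with a 1/32 inch flat end mill.*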

-Here is an assembled robot joint running a PID control loop:

+Here is the assembled robot joint running a PID control loop:

<video controls width=100%>

<source src="https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/final-project/images/pid_control.mp4" type="video/mp4">

diff --git a/docs/final-project/integration.md b/docs/final-project/integration.md

index 9264c490..93dae631 100644

--- a/docs/final-project/integration.md

+++ b/docs/final-project/integration.md

@@ -24,7 +24,7 @@ The night before my final project presentation, I assembled one robot joint and

<video controls width=100%>

<source src="images/pid_control.mp4" type="video/mp4">

-</video>

+</video>*Phew, it works!*

<style>

diff --git a/public/assignments/week08.html b/public/assignments/week08.html

index e2a57bbe..a43fd899 100644

--- a/public/assignments/week08.html

+++ b/public/assignments/week08.html

@@ -696,6 +696,13 @@

0.01 inch bit

</a>

+</li>

+

+ <li class="md-nav__item">

+ <a href="#eventual-success-with-fine-traces" class="md-nav__link">

+ Eventual success with fine traces

+ </a>

+

</li>

</ul>

@@ -951,6 +958,13 @@

0.01 inch bit

</a>

+</li>

+

+ <li class="md-nav__item">

+ <a href="#eventual-success-with-fine-traces" class="md-nav__link">

+ Eventual success with fine traces

+ </a>

+

</li>

</ul>

@@ -1068,9 +1082,10 @@

<p>Then I tried the super thin 0.01 inch flat end mill, and I must admit that I forgot to change the milling speed. So the first attempt was at a fast pace of 4 mm/s. The end mill broke immediately. Then I tried again at a slow speed of 0.5 mm/s and the same cut depth 0.1 mm. It also broke quite quickly. This was frustrating.</p>

<p><img alt="0.01 inch test Fab Modules" src="images/week08/test-010-modules.jpg" style="width:100%" /><em>There are more offsets, since the milling bit is thinner.</em></p>

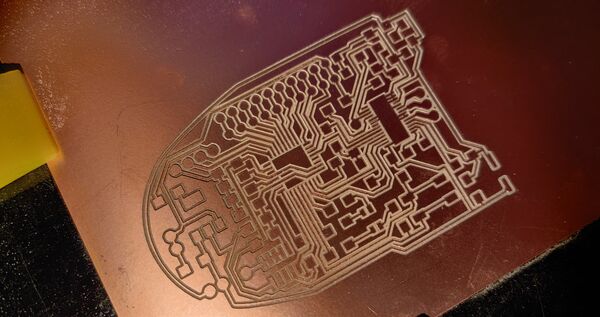

<p><img alt="Milling test 0.01 inch" src="images/week08/test-010.jpg" style="width:100%" /><em>I broke two 0.01 inch milling bits trying to mill this test file.</em></p>

+<h3 id="eventual-success-with-fine-traces">Eventual success with fine traces</h3>

<p>I waited until the <a href="https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/assignments/week18.html#what-has-worked-what-hasnt">final project</a> to try the 0.01 inch end mill again, then at the very slow speed of 0.1 mm/s. It worked for an hour and then broke in the middle of the night. I documented my frustration in my <a href="https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/final-project/presentation.html">final project video</a> and in my final project presentation, Neil Gershenfeld mentioned that everything has to be perfect for this milling bit to work. You have to plane the wasteboard, clean the machine, everything has to be just right. And I think I also made the mistake of having it mill all the traces, instead of just around the ICs with the smallest pads.</p>

<p><img alt="V-bit" src="https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/assignments/images/week18/beautiful_traces.jpg" style="width:100%" /><em>In the end I was able to mill the finest traces on my final project board with a V-bit. Then I cleared the whole board with a 1/64th inch flat end mill and milled the holes and outline with a 1/32 inch flat end mill.</em></p>

-<p>Here is an assembled robot joint running a PID control loop:</p>

+<p>Here is the assembled robot joint running a PID control loop:</p>

<video controls width=100%>

<source src="https://fabacademy.org/2023/labs/isafjordur/students/svavar-konradsson/final-project/images/pid_control.mp4" type="video/mp4">

</video>

diff --git a/public/final-project/integration.html b/public/final-project/integration.html

index 081d240f..2cbb53fd 100644

--- a/public/final-project/integration.html

+++ b/public/final-project/integration.html

@@ -886,7 +886,7 @@

<video controls width=100%>

<source src="images/pid_control.mp4" type="video/mp4">

</video>

-

+<p><em>Phew, it works!</em></p>

<style>

.md-content__button {

display: none;

diff --git a/public/search/search_index.json b/public/search/search_index.json

index a3c7fe02..166f5a51 100644

--- a/public/search/search_index.json

+++ b/public/search/search_index.json

@@ -1 +1 @@